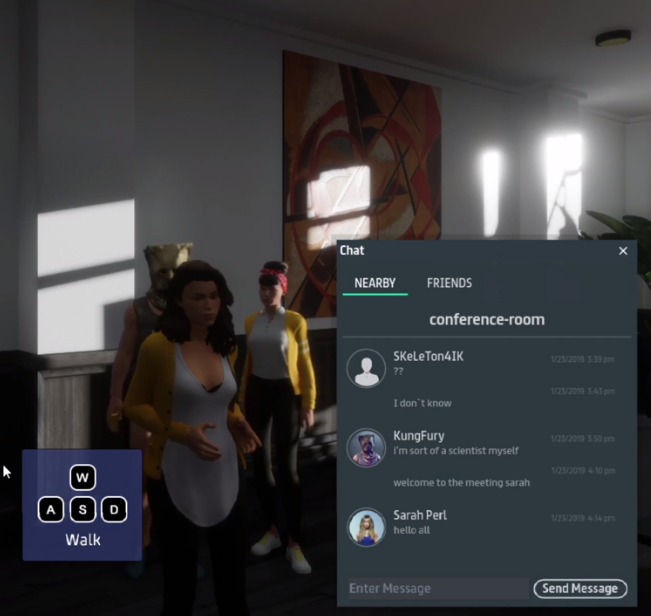

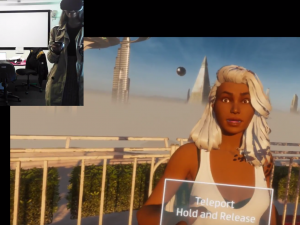

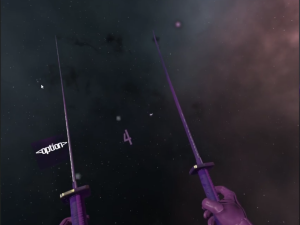

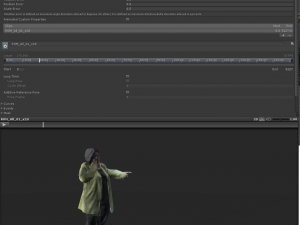

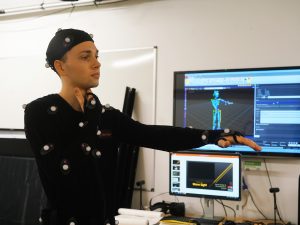

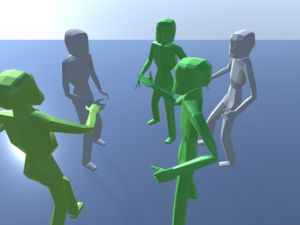

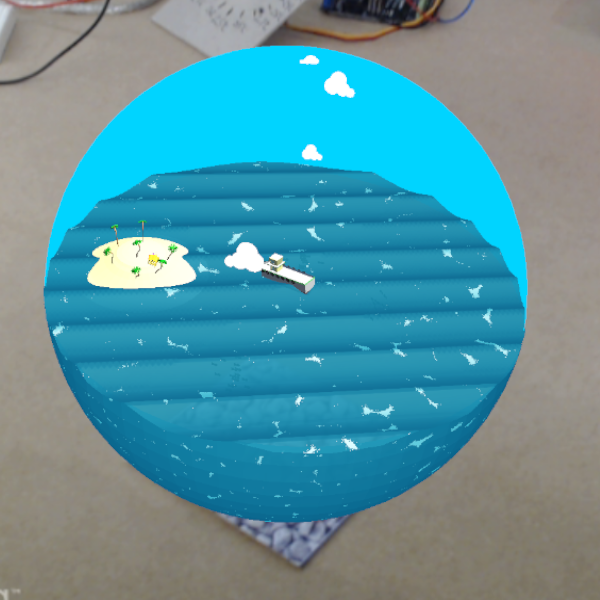

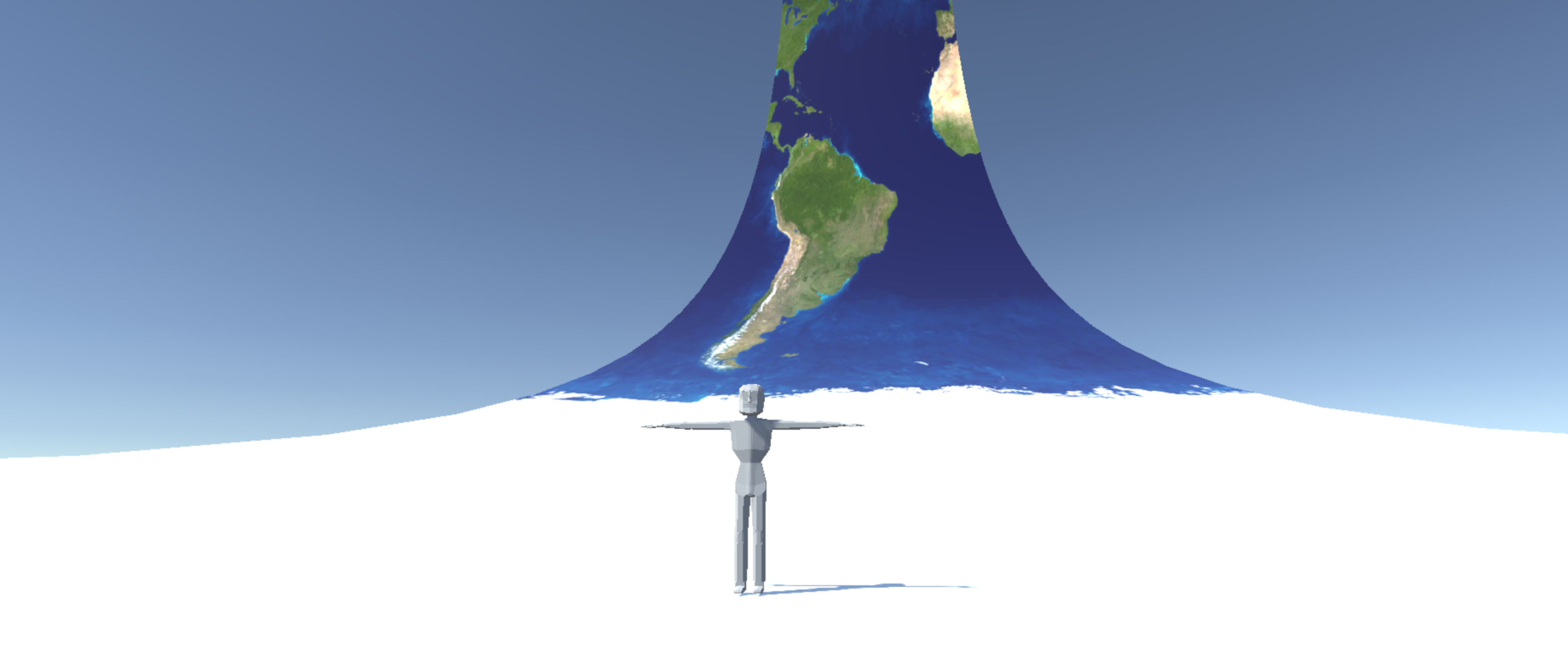

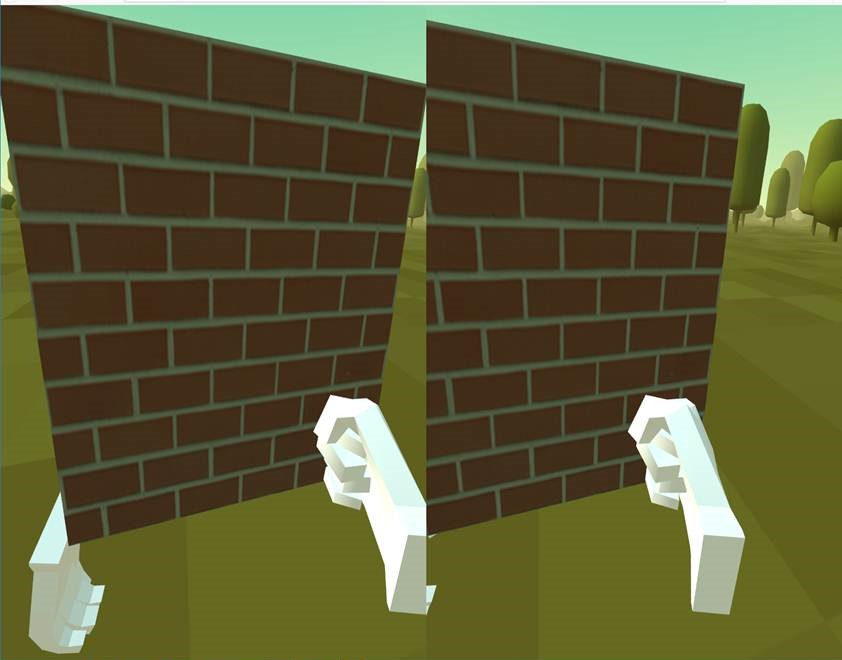

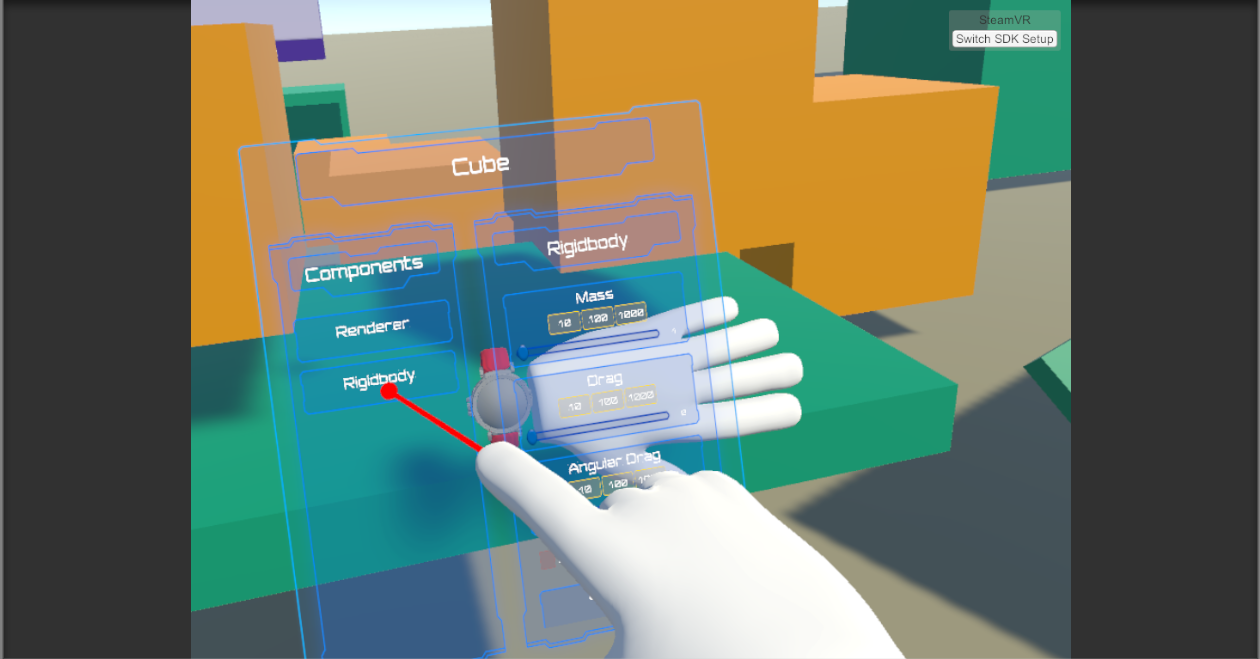

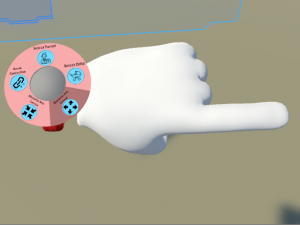

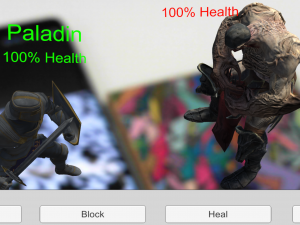

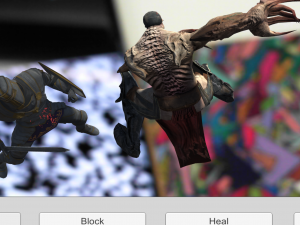

This project explores how virtual reality can be delivered over the web by building a networked environment in which multiple users interact with one another virtually. Its aim is a virtual environment that people can open directly through an HTML link, without installing any plugins or additional software, from a smartphone or tablet, a PC, or a virtual reality headset. So far, basic interactions such as picking up and resizing objects have been implemented, and these actions are synchronised in real time between all users.
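To illustrate the real-time synchronisation described above, here is a minimal sketch of a relay that applies an object update (such as a pick-up or a resize) to a shared copy of the scene and forwards it to every other user. It is not the project's implementation: `SceneRelay`, `ObjectUpdate`, `Client` and `ObjectState` are hypothetical names, and an in-memory `receive` callback stands in for whatever network transport (for example a WebSocket connection) the real system uses.

```typescript
// Hypothetical message describing a change to a shared object.
type ObjectUpdate =
  | { kind: "grab"; objectId: string; userId: string }
  | { kind: "release"; objectId: string; userId: string }
  | { kind: "resize"; objectId: string; userId: string; scale: number };

// Hypothetical client; in a real deployment this would wrap a network connection.
interface Client {
  id: string;
  receive(update: ObjectUpdate): void;
}

// Shared state for one object, kept by the relay.
interface ObjectState {
  heldBy: string | null;
  scale: number;
}

class SceneRelay {
  private clients = new Map<string, Client>();
  private objects = new Map<string, ObjectState>();

  join(client: Client): void {
    this.clients.set(client.id, client);
  }

  // Apply an update to the shared state, then forward it to every other client.
  // Returns false (and forwards nothing) if the update is rejected.
  publish(update: ObjectUpdate): boolean {
    const state: ObjectState =
      this.objects.get(update.objectId) ?? { heldBy: null, scale: 1 };
    switch (update.kind) {
      case "grab":
        // Only one user may hold an object at a time.
        if (state.heldBy !== null && state.heldBy !== update.userId) return false;
        state.heldBy = update.userId;
        break;
      case "release":
        // Only the current holder may release the object.
        if (state.heldBy !== update.userId) return false;
        state.heldBy = null;
        break;
      case "resize":
        if (!(update.scale > 0)) return false;
        state.scale = update.scale;
        break;
    }
    this.objects.set(update.objectId, state);
    // Broadcast to everyone except the sender, who has already applied it locally.
    this.clients.forEach((client) => {
      if (client.id !== update.userId) client.receive(update);
    });
    return true;
  }

  stateOf(objectId: string): ObjectState | undefined {
    return this.objects.get(objectId);
  }
}

// Usage: two users share one cube; Bob records what he receives.
const received: ObjectUpdate[] = [];
const relay = new SceneRelay();
relay.join({ id: "alice", receive: () => {} });
relay.join({ id: "bob", receive: (u) => received.push(u) });
relay.publish({ kind: "grab", objectId: "cube", userId: "alice" });
relay.publish({ kind: "resize", objectId: "cube", userId: "alice", scale: 2 });
```

Keeping one authoritative copy of each object's state on the relay, rather than on each client, is one way to settle conflicts such as two users grabbing the same object at once; the source does not say which approach the project takes.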

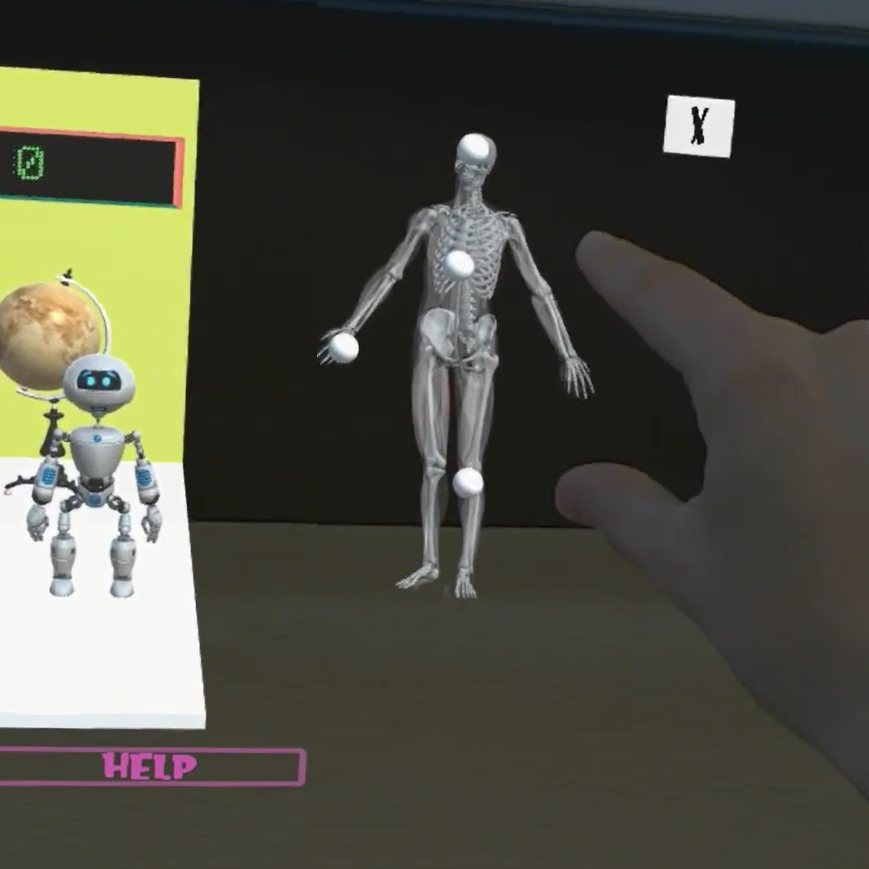

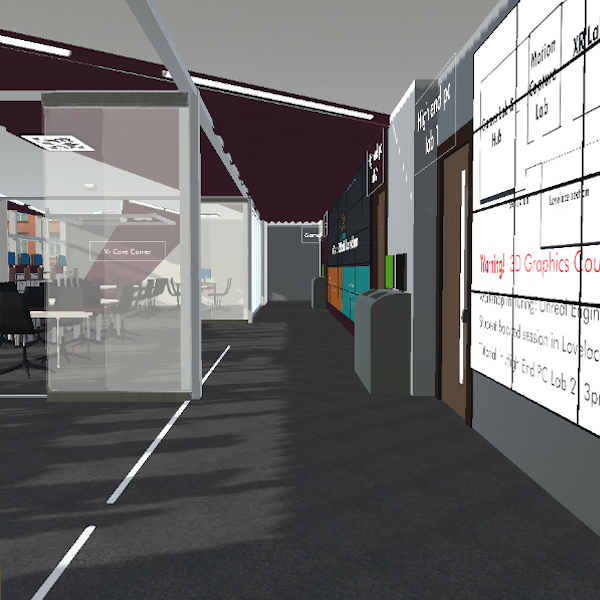

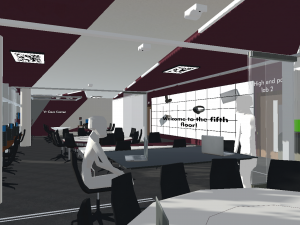

The project is at an early stage, and many features could be added in future, including:

- audio/video communication, to allow richer and more effective communication between users;
- hosting on a server so the environment can be reached from anywhere through a web link, since it currently runs only on a local server;
- use as an educational platform, where simple tutorials would let students complete exercises in virtual reality, which could make lessons easier to learn and remember.